When the System is the Vulnerability

Nicholas Carlini wrote about why he attacks: his motivations for security research and his journey through web, browser, and ML systems. But what stood out most to me was his approach to disclosing vulnerabilities.

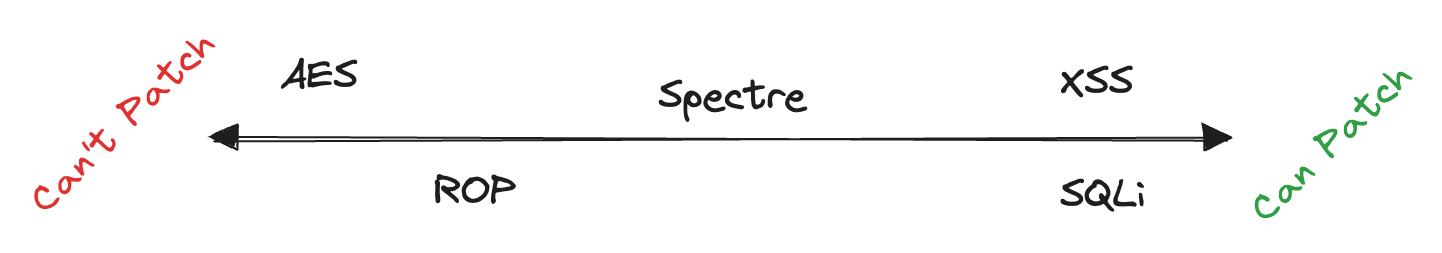

All security vulnerabilities lie on a spectrum of how hard they are to resolve.

Carlini draws a powerful distinction: not all vulnerabilities are created equal. Some are patchable instances: a bug in the code, a logic flaw, a misconfigured flag. Others are unpatchable classes: fundamental issues in the system's design that no hotfix or update can truly address.

A vulnerability is patchable if the people who built the system can fix it and, in doing so, prevent its exploitation. A vulnerability is effectively unpatchable if there's nothing you can do to prevent its exploitation.

For patchable bugs, it makes sense to disclose privately and give developers time to fix things. But for deep, systemic flaws where no real fix exists, waiting only increases the damage. In those cases, going public quickly is often the most responsible move.

This framing resonates, especially in machine learning, where many vulnerabilities lie not in the implementation but in the architecture, the training data, or the assumptions we've come to accept as "normal." You can't patch a dataset. You can't hotfix a learning algorithm. Sometimes, the attack is the system.

Carlini's spectrum forces a shift in thinking: from treating security issues as bugs in the code to recognizing that some vulnerabilities are bugs in the idea. That's a harder problem.

How do we responsibly secure systems when the system itself is the vulnerability?